Amplifying Trust: My Multi‑Year Collaboration with IBM on Ethical AI

Jun 28, 2025

Written by Sabine VanderLinden

From AI Agent Functionality to Trusted AI Narratives

In July 2023, on a sunny day at Wimbledon’s Centre Court, IBM wasn’t just serving tennis insights – it was showcasing the power of trustworthy AI. Deep in the tournament's “Engine Room,” IBM's AI models, acting as advanced AI agents, analyzed data to predict match outcomes, explain player performance, and enhance fan experiences.

These AI applications in business and sports demonstrate how AI can deliver actionable insights and drive decision-making. The Wimbledon environment provided a dynamic operational context for IBM's AI, highlighting how such systems adapt and perform in real-world settings.

I, Sabine VanderLinden, stood alongside IBM's impressive experts, capturing these moments and translating them into inspiring stories for business leaders around the world.

This scene exemplified a pivotal shift in IBM's approach to artificial intelligence: moving beyond what AI can do, to proving why it can be trusted. Over the past four years, my influencer collaboration with IBM has been anchored in this theme – ensuring that IBM's AI innovations are communicated with a focus on ethics, transparency, and governance.

With growing interest in trustworthy AI among business leaders and the public, I brought thought leadership and credibility to high-stakes enterprise AI conversations through the ‘Amplified Success’ pillar of our DIVAAA framework (Discover, Investigate, Validate, Adopt, Activate, Amplify). The result is a compelling case study in how strategic storytelling and amplification can elevate a tech giant's narrative from mere functionality to trusted innovation.

IBM's Shift to Ethical and Explainable AI

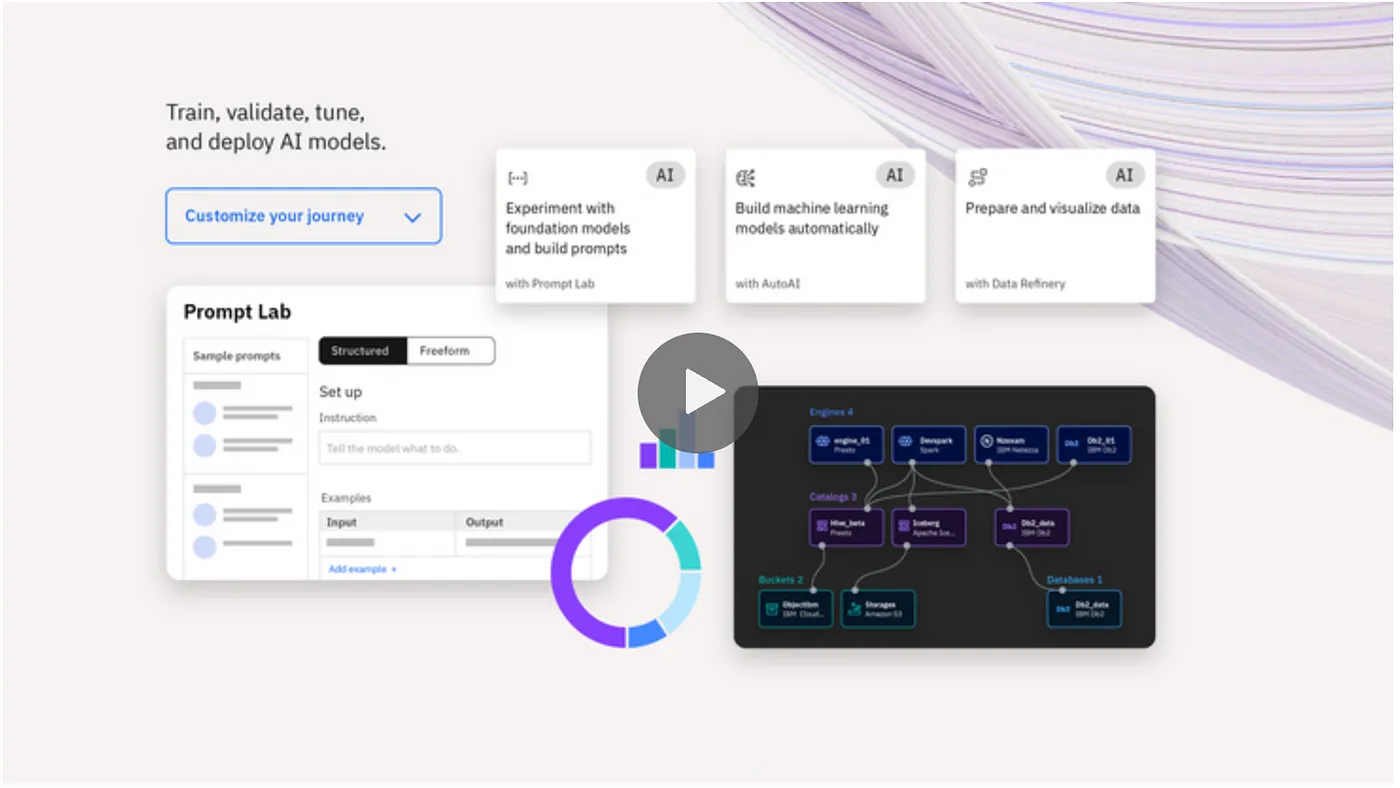

IBM has long been a pioneer in AI, from early Watson™ breakthroughs to today's cutting-edge watsonx platform. But as AI systems grew more powerful, IBM recognized that widespread adoption hinged on trust, accountability, and understanding. Enterprises and regulators alike began demanding that AI be explainable, fair, and used responsibly.

“In general, we don’t blindly trust those who can’t explain their reasoning. The same goes for AI,” IBM states plainly in its AI ethics guidelines.

Indeed, IBM's official stance is that companies “must be able to explain what went into their algorithm’s recommendations. If they can’t, then their systems shouldn’t be on the market.”

This ethos underpinned IBM's shift from marketing AI purely on capability to marketing it on trust and transparency. Explainability is crucial because AI systems must be able to describe their reasoning and the knowledge they use to make decisions, which is often derived from carefully curated training data. AI is increasingly used for complex problem solving, making transparency even more important.

Internally, IBM established robust AI ethics principles and released tools like IBM AI Explainability 360 and Watson OpenScale to ensure AI models can be interpreted and monitored. These tools serve as essential resources for building trust and transparency in AI deployments. More recently, IBM's launch of the watsonx portfolio built trust into the product line itself by creating solutions that prioritize explainability and ethical use.

For example, IBM watsonx.governance is explicitly designed to:

“Automate governance to proactively manage AI risks, simplify regulatory compliance and create responsible, explainable AI workflows.”

This includes maintaining control over AI systems to prevent unintended behaviors and ensure human oversight.

In other words, IBM is baking explainability and ethical guardrails into its flagship AI solutions from the start. Large Language Models (LLMs), pivotal in generative AI, play a significant role in this ecosystem by generating human-like text, which enhances the transparency and usability of AI systems. I very much enjoyed my chat with Dawn Herndon, the executive who has the best job at IBM today... I think.

This positioning is timely. A late-2024 IBM Institute for Business Value report noted that 80% of business leaders see issues of AI explainability, ethics, bias or trust as major roadblocks to adoption. At the same time, 75% of executives now view strong AI ethics as a key market differentiator that sets companies apart. For IBM’s clients – CEOs, CMOs, CTOs, and CROs of global enterprises – the message was clear: embracing ethical, explainable AI is no longer optional. It's a business imperative for innovation, risk management, and brand reputation.

The Amplified Success Approach: Thought Leadership Meets Storytelling

To turn this principle into an external narrative, IBM partnered with industry thought leaders like me.

As a seasoned executive and innovation ambassador, I wanted to bring a unique blend of domain expertise and media savvy. My role was to amplify IBM's advances in AI ethics and explainable AI, making them resonate with C-suite audiences.

In practice, this meant translating IBM's technical progress into compelling stories, accessible insights, and concrete business value messaging. Consistent messaging was crucial in building trust and credibility with stakeholders. It's a strategy aligned with the “Amplify” stage of our DIVAAA framework – the stage focused on scaling impact through strategic communication. Rather than just issuing press releases or technical papers, IBM invested in thought leadership content, social media engagement, live storytelling, and executive dialogues that highlighted trust, governance, and transparency in AI.

Indeed, I have been lucky. Starting in 2021, IBM realized the power of engaging social media influencers to “amplify opportunities and changes” across its business. I became a key collaborator, particularly for IBM's insurance and financial services initiatives. With my IBM background, I was already recognized as an early adopter of AI and cloud innovation.

AI is transforming the banking and financial services industry, enabling institutions to leverage advanced technologies for improved efficiency and customer satisfaction. I worked closely with IBM's international business and marketing teams to craft multi-channel content – from LinkedIn articles and Twitter (X) threads to webinar panels and podcast episodes – all underscored by IBM's values of AI for good.

Storytelling was central: I didn't just share IBM's news, which we all read daily online. I contextualized it, often drawing on personal experiences to build authenticity and connect with the audience. For example, if IBM unveiled a new explainable AI feature, I would discuss the broader significance of AI transparency in business, often quoting IBM's own principles or industry research to reinforce credibility.

This approach positioned IBM's executives as industry visionaries, spotlighting themes like embedded AI, agile development (IBM Garage), and responsible AI deployment. By blending IBM's technical expertise with my independent voice and narrative flair, I hoped to shape unique collaboration messages and build a bridge of trust with the audience. My friends, executives of well-known corporations, followed along, learned about IBM's AI solutions from the outset (questioning their own perceptions along the way), and gained insight into why those solutions mattered for ethical leadership and innovation. Real-world examples and case studies further illustrated key points and demonstrated the practical impact of IBM's approach.

IBM's consistent focus on ethical AI and transparency leads the industry toward higher standards of trust and governance. As a leading company in ethical AI, IBM sets a benchmark for responsible innovation and industry best practices.

Foundations of Trust: AI Agents and Models in Practice

Trust is the cornerstone of any successful relationship—whether between humans or between humans and technology. As AI agents and machine learning models become increasingly embedded in the fabric of human life, their ability to earn and maintain trust is paramount. AI agents, designed to autonomously perform specific tasks or make decisions on behalf of users or other systems, must be developed with a clear commitment to ethical principles and transparency. This means that every step of the AI development process—from data collection to model training and deployment—should prioritize explainability and accountability.

For example, in the realm of medical diagnosis, AI models are now assisting medical professionals by analyzing complex data and suggesting potential diagnoses. However, for these AI systems to be truly effective, they must not only deliver accurate results but also provide clear explanations for their recommendations.

When a medical professional can understand the reasoning behind an AI agent's decision, it builds trust in the system and supports better patient care. This approach extends to other domains as well, where AI technologies are being used to support decision making, automate processes, and enhance human capabilities. By embedding ethical principles and transparent processes into the development of AI agents and models, organizations can foster trust, encourage adoption, and ensure that these powerful tools serve the best interests of humanity.

Unlocking Language: Natural Language Processing as a Trust Enabler

Natural Language Processing (NLP) is a key technique in artificial intelligence, enabling machines to bridge the communication gap with humans by understanding, interpreting, and generating human-like text. This capability is foundational for building trust in AI systems, as it allows for open communication and more intuitive interactions between people and technology. Large language models (LLMs) have propelled NLP to new heights, powering virtual assistants, chatbots, and a wide array of language-based AI tools that are now part of daily life.

The ability of LLMs to generate coherent, contextually relevant, and human-like text has unlocked countless applications—from customer service to real-time translation. However, this same power brings significant responsibilities. Concerns around fairness, truthfulness, and the potential for harm—such as the creation of deepfakes or the spread of misinformation—underscore the need for careful oversight.

For instance, while a virtual assistant can streamline business processes and enhance user experience, it must also be designed to avoid bias and provide accurate, reliable information. Building trust in NLP-driven AI systems requires a commitment to transparency, ongoing evaluation, and the responsible development of models and tools.

By prioritizing fairness and truth and fostering open communication between humans and AI, organizations can ensure that NLP technologies enhance human life and support trustworthy decision-making across various applications.

The Generative Leap: Deep Learning and the New Frontier of Ethical AI

The advent of deep learning has ushered in a new era for artificial intelligence, enabling the creation of generative AI models that can produce original content—be it images, videos, or text—that closely mirrors real-world data. This leap in capability has opened doors to unprecedented innovation, allowing businesses and creators to solve problems, automate processes, and generate new ideas at scale. Generative AI, powered by advanced neural networks and machine learning techniques, is now at the forefront of AI technologies, driving solutions in fields ranging from entertainment to healthcare.

Yet, with this innovation comes a host of ethical concerns. The same models that can create lifelike images or compose persuasive text can also be misused to fabricate deepfakes or manipulate public opinion, posing risks to individuals and society at large.

Addressing these challenges requires a responsible approach to AI development—one that involves not only data scientists and engineers but also industry experts, policymakers, and the broader public. By fostering collaboration and open dialogue, the AI community can develop guidelines and safeguards that prioritize human well-being, safety, and trust. Ultimately, the future of generative artificial intelligence depends on our collective ability to balance innovation with responsibility, ensuring that AI systems and models are developed and deployed in ways that benefit humanity and uphold ethical standards.

Multi-Year Campaign Highlights (2021–2025)

Over four years, alongside IBM, I executed a series of high-impact campaigns illustrating this journey from AI functionality to AI trust. Each campaign served as a case study in the “amplified success” model – leveraging events and content to deepen IBM's credibility in AI ethics and explainability, while delivering tangible engagement results. In the early stages of these campaigns, strategies were designed to iteratively improve based on feedback and performance metrics, ensuring continuous refinement and greater impact over time.

- 2021 – AI, Cloud & The IBM Garage: IBM's foray into influencer-led outreach began with a focus on hybrid cloud, AI in financial services, and the IBM Garage innovation framework. I joined a select group of influencers and other tech ambassadors running quarterly campaigns to highlight IBM's newest capabilities in fintech and insurtech. This was so much fun. I produced a rich mix of written pieces, audio interviews, and video content showcasing how IBM's cloud and AI solutions, underpinned by robust data centers, could be scaled with speed – always tying back to trust and real-world outcomes. This included authoring articles on hybrid cloud and risk management, hosting webinars with IBM subject-matter experts on topics like the societal impacts of AI and risk governance, and even moderating a Twitter chat on cloud computing trends. Generative AI also played a role in these efforts by generating synthetic data for training financial models while ensuring data privacy, a critical aspect for industries like banking and insurance. The mandate was clear: use external voices to amplify IBM's message that its technology was innovative and enterprise-ready, with real-world applications across multiple sectors. The outcome validated the approach. Over ~18 months, these campaigns drove significant traffic and engagement. They resulted in at least 5,390 direct clicks to IBM's targeted web pages – potential leads who took action due to the content. Bear in mind that monitoring clicks, or even having the technology to do so, was still very new in 2021. Dozens of thought leadership posts accumulated thousands of likes and shares. Perhaps more importantly, IBM saw that a "trust-first" narrative resonated with its audience. By the end of 2021, the groundwork was laid: IBM's influencer-led social channels and digital events were abuzz with discussions on AI ethics, trustworthy hybrid cloud, and the value of co-creation (IBM Garage), not just raw tech performance. These efforts also informed IBM's future actions, guiding the planning and direction of subsequent campaigns.

Business Value of Ethical AI for the C-Suite

IBM's focused work on AI ethics and explainable AI – amplified through thought leadership – addressed real business concerns and created value for various C-suite stakeholders:

- For CEOs and Boards: Building AI that is trusted and transparent mitigates strategic and reputational risk. A CEO implementing AI at scale knows that a single ethical lapse or unexplainable failure could erode customer trust or invite regulatory scrutiny. By embedding ethics, IBM not only avoids such pitfalls but actually turns them into a selling point. A recent IBM study pointed out that investing in AI ethics is a strategic business decision with clear returns: avoiding costly fines or breaches (economic ROI) and bolstering brand reputation and customer trust (reputational ROI). My storytelling style often highlighted these ROI-of-ethics angles, giving CEOs language to justify ethical AI investments as fuel for innovation and safeguards for the brand. Importantly, ethical frameworks are shaped by the underlying beliefs and values of organizations and societies, which influence how fairness and bias are defined and addressed in AI systems.

- For CMOs (Chief Marketing Officers): In an era of skepticism toward AI, being a company known for responsible AI is a powerful differentiator. CMOs care about brand perception and customer experience. Our campaigns helped position IBM as a trusted advisor in AI, not just a vendor. By showcasing IBM's principled approach – e.g., quoting IBM’s stance that “imperceptible AI is not ethical AI” – the messaging built confidence among clients that IBM's solutions would be transparent and user-centric. This credibility opens doors for marketing: IBM’s case studies on ethical AI become compelling content that CMOs can use in thought leadership, and the amplified reach (hundreds of millions of impressions) means IBM's voice is prevalent in discussions about AI's future. In short, the influencer collaboration boosted IBM's brand equity as an ethical innovator, making marketing campaigns more authentic and effective. In the context of AI-driven communication, the importance of the word – how words are processed, represented, and understood by AI – was also highlighted, especially in natural language processing applications.

- For CTOs and Tech Leaders: Technology executives are often enthusiastic about AI's potential but cautious about its pitfalls. IBM's emphasis on explainability directly speaks to CTOs' need for trustworthy AI systems that engineers can monitor and improve. Through my deep-dive content – covering everything from model bias mitigation to AI governance frameworks – CTOs learned how IBM's solutions provide the tools to “ensure the AI's decision-making process is reviewable and explainable in terms people can understand.” This is critical for adoption: when 80% of leaders cite lack of trust as a barrier, offering explainability and auditability becomes key to moving AI pilots into production. Moreover, IBM's thought leadership around AI governance (such as the watsonx.governance toolkit) gives CTOs and CIOs a roadmap for deploying AI at scale with fewer compliance headaches. The value is twofold – faster deployment (since stakeholders trust the technology) and lower risk of unforeseen outcomes. Human supervision remains essential as a safeguard, ensuring that high-risk AI actions are overseen and that ethical standards are maintained. My case study narratives often included how-tos or best practices (e.g., “questions to ask your team about AI transparency”), which provided actionable insight to tech leaders looking to implement responsible AI. Additionally, as each agent learns from new data and feedback, AI systems continuously improve their performance and adapt to changing requirements.

- For CROs and Risk/Compliance Officers: Perhaps no group is more relieved by explainable, ethical AI than the risk management teams. IBM's narrative of “AI with accountability” arms CROs with assurance that AI models can be audited and won't operate as inscrutable black boxes. In my coverage of IBM's approach, I frequently touched on AI governance and accountability – from describing IBM's internal AI Ethics Board efforts to endorsing measures like audit trails for AI decisions. By highlighting features such as bias detection and traceability in IBM's tools, the collaboration effectively communicated to risk officers that IBM understands their world. An AI solution that can explain why it denied a loan or flagged a transaction is one that a bank's CRO can approve. Moreover, IBM's stance that “teams should maintain access to a record of an AI's decision process and be amenable to verification” aligns perfectly with emerging AI regulations (e.g., the EU AI Act). Through articles and talks, I underscored how IBM’s proactive governance could help clients stay ahead of regulatory compliance – a direct business value by avoiding legal issues and enabling smoother audits. The difference between AI and human judgment is also emphasized, as AI systems, while powerful, lack the nuanced sense of consciousness and ethical reasoning that humans possess.
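To make the loan example concrete, here is a minimal sketch of a decision that explains itself and leaves an audit record. It assumes a toy linear scoring model; the weights, threshold, field names, and the `decide` function are hypothetical illustrations and are not taken from watsonx.governance or any other IBM product.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical weights for a toy linear credit score (illustrative only).
WEIGHTS = {"income_k": 0.5, "debt_ratio": -40.0, "late_payments": -5.0}
BIAS = 10.0
THRESHOLD = 20.0  # scores at or above this value are approved


@dataclass
class DecisionRecord:
    applicant_id: str
    approved: bool
    score: float
    contributions: dict  # per-feature contribution to the score
    top_reason: str      # feature that most influenced the outcome
    timestamp: str


def decide(applicant_id: str, features: dict) -> DecisionRecord:
    """Score an applicant and record why the decision came out as it did."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    approved = score >= THRESHOLD
    # For a denial, report the feature that pulled the score down the most;
    # for an approval, report the feature that pushed it up the most.
    pick = max if approved else min
    top_reason = pick(contributions, key=contributions.get)
    return DecisionRecord(
        applicant_id=applicant_id,
        approved=approved,
        score=score,
        contributions=contributions,
        top_reason=top_reason,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


# A simple append-only audit trail: one JSON line per decision.
audit_log = []
record = decide("A-001", {"income_k": 60, "debt_ratio": 0.6, "late_payments": 3})
audit_log.append(json.dumps(asdict(record)))

outcome = "approved" if record.approved else "denied"
print(f"{record.applicant_id} {outcome}; main factor: {record.top_reason}")
```

A reviewer can later replay any line of `audit_log` to see the inputs' contributions and the main factor behind the outcome, which is the kind of traceability a risk officer would ask for. A production system would need far more than this, including tamper-evident storage and a properly validated model.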

In brief, the business value of ethical AI – as championed by IBM – spans financial, reputational, and operational dimensions. Ethical AI isn't just a moral high ground; it drives better adoption rates, stronger customer loyalty, and more sustainable innovation. IBM's own research found that organizations using a holistic AI ethics framework can unlock benefits like reduced costs (through risk avoidance), improved customer satisfaction, and even faster innovation cycles due to enhanced internal capabilities. These are outcomes any C-suite can rally behind. As one of the world's leading technology companies, IBM's global impact in ethical AI sets a benchmark for the industry.

A Blueprint for Building Trust and Credibility in the AI Era

This multi-year collaboration with IBM illustrates how thought leadership and strategic amplification, delivered through trustworthy advocates, can transform a company's market perception. Indeed, it all comes down to alignment. By focusing on AI ethics and explainable AI when leveraging our Amplified Success approach, we collaborated to elevate the conversation from product features to core values and trust. In doing so, IBM did more than promote technology; it built credibility. Each blog post, podcast episode, LinkedIn carousel, or event coverage became a thread in a larger narrative: that IBM is a responsible steward of AI in an age of uncertainty.

For IBM, this narrative shift supported a broader business strategy. It helped us differentiate the brand in a crowded AI market by aligning with what enterprises truly care about – trustworthy outcomes. It reinforced IBM's role as a provider of AI solutions and as a partner in governance and ethical innovation. The measurable outcomes (tens of millions reached, thousands engaged, and concrete leads generated) demonstrate that the message resonated. IBM's voice in high-stakes AI discussions grew stronger, backed by my independent validation and the engaging human stories I hope I told around IBM's technology.

Perhaps most importantly, this case shows a path forward for other organizations. In the era of advanced AI, “amplifying” success means more than touting technical wins – it means earning trust at every step.

As I wrote, “organizations must prioritize explainable, transparent, and responsible AI practices” to maintain trust and ethical decision-making.

By living that philosophy and broadcasting it widely, IBM has seeded a lasting trust in its brand. Our journey stands as a narrative blueprint for combining innovation with integrity, and it shows how an amplified success story can inspire an entire industry to marry AI's potential with humanity's values.


Activating IBM Stories:

Embeddable AI Set to Capture 36% of the $1 Trillion AI Market by 2030

IBM + Wimbledon 2024: New GenAI Features to Enhance Global Fan Experience

IBM AI for Business: 10 Steps Where watsonx Creates Competitive Advantage Through Trustworthy AI

IBM Partner Ecosystem: Revolutionizing Revenue Models

Embedded Intelligence: Beyond AI in IBM Partner Ecosystem

Uncovering the Power of IBM Generative AI models: It is all about the foundation models

Unlocking AI for Business: A Guide to IBM watsonx

From Explainable AI to Responsible AI: Implementing Ethical Practices in the Digital Age