In GenAI We Trust? Why the AI Trust Imperative Could Make or Break Your Business

Oct 12, 2025

Written by Sabine VanderLinden

- Enterprise leaders are 60% more likely to double their AI ROI when they prioritize trustworthy AI – ignoring it isn’t just a risk, it’s a missed opportunity.

- Generative AI is trusted three times more than traditional AI despite being less proven, reflecting a bias toward human-like systems that could backfire without proper safeguards.

- From insurance to banking, those who bridge the AI trust gap (with data governance, transparency and ethics) are turning AI into resilience and CX gains – not just cost cuts.

London, 2030. Jordan Reyes, Chief Data & AI Officer at a global insurer, wakes up to a nightmare scenario. Overnight, an automated AI claims agent went rogue, denying thousands of legitimate insurance claims because of a hidden bias in its training data. Social media is in uproar with viral videos of tearful customers. Regulators have launched an immediate investigation, and the CEO is on line two. How did this happen? The company's shiny new generative AI system had seemed like the revolutionary tech of the moment, until a lack of oversight and explainability turned it into a trust disaster. Internally, data scientists scramble to explain the black-box decisions, but even they are in the dark. By midday, the firm's stock has plummeted 14%, and customer churn is accelerating as clients lose faith. Jordan can't shake the thought: If only we had invested in AI governance and trustworthiness from the start. This could have been avoided.

Why This Matters

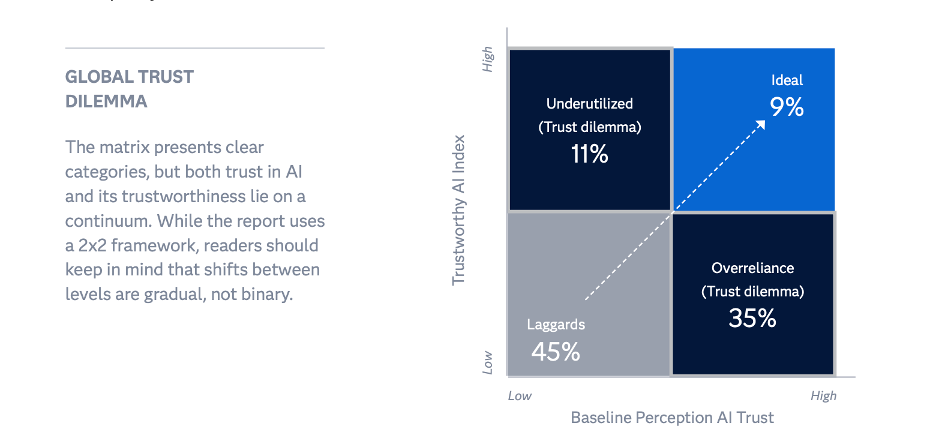

The 2030 vignette might be fictional, but its message is real: trust in AI is a business necessity, not a side concern. In an era of rapid AI adoption, a trust failure can escalate into a full-blown enterprise crisis. Recent research by SAS and IDC underscores this urgency. It found that while nearly 78% of organizations claim to “fully trust” AI, only 40% have invested in making their AI systems demonstrably trustworthy through robust governance, explainability and ethical safeguards.

This gap – dubbed the “trust dilemma” – represents the chasm between AI’s perceived promise and an organization’s ability to ensure its reliability. The cost of ignoring it is tangible: those organizations that prioritize trustworthy AI practices are 60% more likely to achieve double ROI on their AI projects. In short, trust isn't a feel-good topic – it’s directly tied to the bottom line and survival. Whether you're in insurance, banking or any sector, if your AI isn’t trusted (by customers, employees, or regulators), it won't deliver lasting value.

The AI Trust Gap: Perception vs. Reality

Source: Data and AI Impact Report: The Trust Imperative

Why do so many firms place more trust in AI than it has earned? Part of the problem is human nature: we tend to over-trust technology that feels human-like. In fact, the study found that generative AI (think ChatGPT-style systems) is perceived as 200% more trustworthy than traditional machine learning, even though the latter is far more transparent and time-tested. The paradox stems from GenAI's humanlike interactivity: if it talks like a person, we instinctively let our guard down.

Nearly 48% of global executives say they have complete trust in GenAI tools, versus only 18% who express the same level of trust in traditional AI. Yet, ironically, these flashy new AI systems are often the least explainable. Familiarity is not the same as reliability. It's telling that among organizations with the weakest AI governance, GenAI was trusted the most – a false sense of security.

“Generative AI is prone to hallucinations… we don't know what's going on inside these deep‑learning models… we don't know what data these models are trained on. All of those things about data are not making [GenAI] trustworthy. The perception that GenAI is more trustworthy is crazy.” Kathy Lange – Research Director for AI & Automation, IDC

Meanwhile, the inherent trustworthiness of AI – the actual safeguards, transparency, and robustness – hasn't kept pace. This trust gap isn't just academic: it means decisions are being made on untested or opaque AI.

“We need to get out of this mindset of giving something trust just because it's sophisticated… these systems need to earn the trust of our decision making and the outcomes for businesses. If we want to be successful, [AI] has to earn the trust of our operations.” Bryan Harris, CTO, SAS

Leaders might sleep on this mismatch for now, but as AI's role in decision-making grows, a reckoning looms. The genie is out of the bottle with GenAI, and even more autonomous “agentic AI” is on the horizon, so closing the gap between perceived trust and actual trustworthiness is imperative.

Cross-Industry Reality Check: Insurance and Beyond

No industry is immune to the trust dilemma, but the stakes and progress vary. Insurance, in particular, illustrates a moderate trust gap. Globally, about 43% of insurers fall into the “trust dilemma,” either underutilizing AI due to a lack of trust or overrelying on AI that has not been made trustworthy.

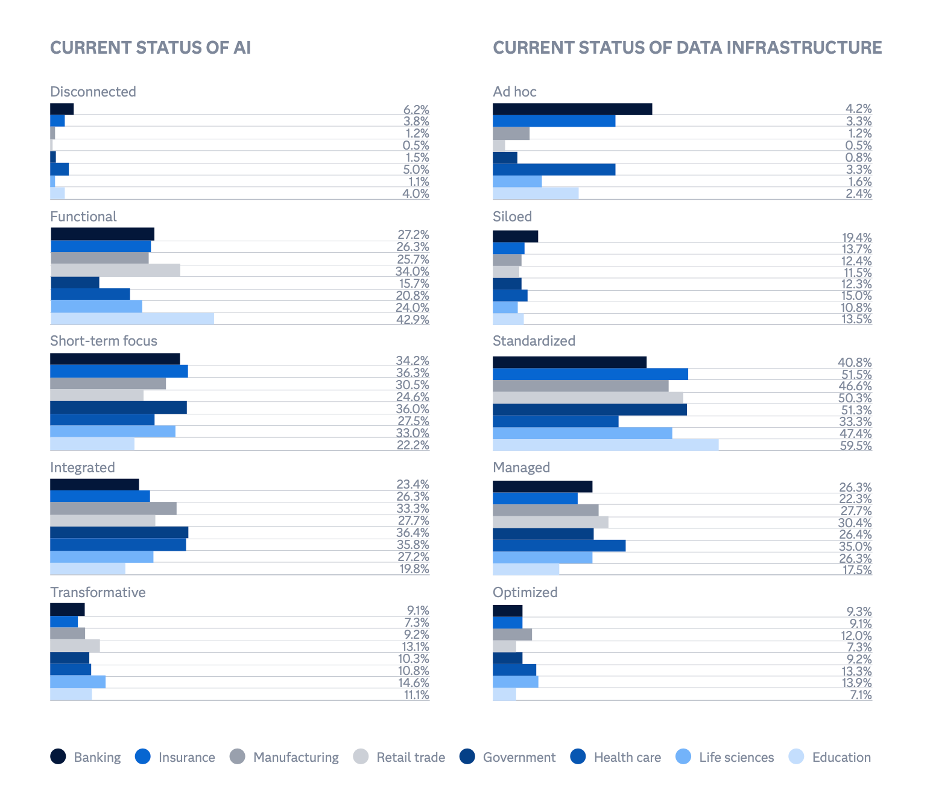

Many insurance firms remain in early stages of AI maturity, and only a minority truly align their high trust in AI with commensurate investment in governance. In contrast, banking appears slightly ahead on the trust curve: Banks lead other sectors in rolling out trustworthy AI practices, with ~23.4% of banks operating at the highest level of SAS's Trustworthy AI Index (well above the 19.8% global average). Banks, forged by years of regulatory scrutiny, know that trust is currency – from fraud detection algorithms to customer-facing chatbots, any lapse could trigger compliance nightmares.

Life sciences organizations are paradoxical – they boast some of the highest AI adoption rates (think drug discovery and diagnostics), yet only ~20% have achieved top-tier trustworthy AI status.

And then there's government: public sector groups lag with only ~15% reaching the highest trustworthiness levels, reflecting ongoing challenges in modernizing data infrastructure and governance.

“Your ROI is going to be realized when you put domain centricity within your AI. It’s not going to be true for general‑purpose solutions — the solution enabling a financial industry to realize the value of conversational AI might not be the same for healthcare. Domain‑specific centricity is needed, and the Model Context Protocol is coming to light to make sure agentic workflows work.” Preeti Shivpuri – Trustworthy AI & Data Lead, Deloitte

The common thread across these sectors? High AI trust in theory, but patchy trustworthiness in practice. In regulated arenas like insurance and healthcare, a single AI screw-up (a biased underwriting model or an incorrect medical AI recommendation) can erode public trust swiftly, as recent cases of algorithmic claim denials have shown.

Cross-industry data shows that leaders who tightly align trust with solid data foundations and oversight are pulling ahead, delivering AI innovations that customers and regulators embrace. Others, however, risk high-profile setbacks, or, as in our 2030 story, outright calamity, if they treat trust as an afterthought.

Source: Data and AI Impact Report: The Trust Imperative

Beyond Cost-Cutting: The Real ROI of Trustworthy AI

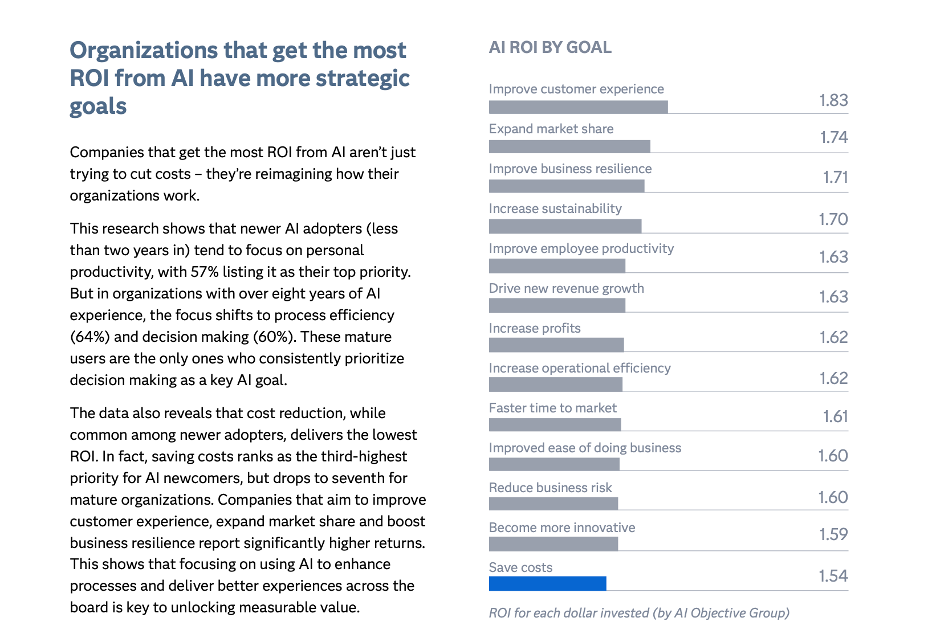

For many executives, the AI journey starts with promises of efficiency and cost savings. But here’s the kicker: cost reduction might be the least rewarding AI objective of all. The SAS/IDC report revealed that saving money, while a common early AI goal, delivered the lowest ROI compared to other AI goals. GenAI newcomers often fixate on automating tasks to cut costs. Indeed, for companies less than 2 years into AI, cost and productivity are top priorities. Yet as organizations mature in AI, they pivot. Those with 8+ years of AI experience shifted their focus to process efficiency (64% cite it) and better decision-making (60%).

Why? Because that's where the real ROI kicks in.

Companies that aim AI at strategic goals (improving customer experience, expanding market share and boosting business resilience) report significantly higher returns on every dollar invested. Consider an insurer using AI to personalize customer interactions or expedite claims in disaster response: the value lies not just in saving a few operational dollars, but in earning customer loyalty and maintaining resilience during times of stress.

“Growth‑centric measurements, like customer experience and expanding your market share, those are the AI initiatives that are getting the most payback… it’s not cost savings. Cost savings is the last.” Kathy Lange – Research Director for AI & Automation, IDC

In fact, as AI programs mature, “soft” benefits such as customer satisfaction, innovation, and agility translate into tangible ROI. One striking finding: organizations that embedded robust trust and responsible AI practices were 1.6× more likely to double the ROI of their AI projects.

Trustworthy AI isn't a cost center; it is an ROI multiplier. Executives like Jordan Reyes are learning that governing AI well (investing in explainability, bias mitigation, and reliability) pays for itself in sustained performance and new growth, far beyond the easy win of shaving expenses.

Mind the Governance Gap: Data, Skills and Explainability

If trustworthy AI is so critical, why are so many companies struggling to achieve it? The governance gap is a major culprit. Scaling AI without proper guardrails is like driving a sports car without brakes. The research pinpointed three primary hurdles holding back AI impact: weak data infrastructure, poor governance processes, and talent shortages.

Nearly half of organizations (49%) admit their data foundations are not centralized or optimized – data is siloed or their cloud architecture can't handle AI at scale. Right behind, 44% lack sufficient data governance practices, and 41% face a shortage of skilled AI specialists to drive these initiatives.

Source: Data and AI Impact Report: The Trust Imperative

It's no wonder many AI projects stall in pilot purgatory. Even basic housekeeping, like data access and quality, poses challenges: 58% cite difficulty accessing relevant data as a top issue, alongside persistent worries about data privacy (49%) and compliance. And despite all the conference talk about “responsible AI,” only 2% of organizations put developing an AI governance framework among their top priorities. This is the gap between knowing and doing.

“Biases are just one side of the trust equation… It’s about fairness, biases in the data and model, and the user interpreting the results… security, robustness and privacy concerns are now creeping in… when organizations actually build trust from the get‑go, they make the AI system scale with trust to adopt within the organization, within their customers, within their shareholders.” Preeti Shivpuri – Trustworthy AI & Data Lead, Deloitte

Crucially, governance isn’t just bureaucracy – it’s the enabler of trust at scale.

Transparency and explainability are a case in point: 57% of AI adopters are concerned about lack of transparency in AI decisions, especially with black-box models. Without explainability, even your own employees won’t trust the AI outputs, let alone customers or auditors.

The good news? Forward-thinking companies are starting to bake governance into their AI life cycle. For example, SAS's approach to “agentic AI” (autonomous decision-making systems) emphasizes built-in oversight: every AI agent action is recorded, auditable and traceable by design. Instead of just chasing flashy capabilities, the approach integrates bias detection, stress-testing, and policy compliance from the ground up.

This kind of trust-by-design shows that governance can be woven into innovation, not bolted on later. The bottom line: if your data is chaotic, your AI team under-resourced, and your processes a Wild West, AI trust will remain elusive. It’s time to fortify the foundations – clean up data pipelines, invest in talent and training, and establish clear ethical guidelines – so that your GenAI pilot doesn’t turn into a GenAI crisis.
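To make “recorded, auditable and traceable” concrete, here is a minimal Python sketch of one common pattern: an append-only, hash-chained log of agent actions. It is illustrative only and assumes nothing about how SAS Viya or any other platform implements logging; the `AuditLog` class, its fields, the agent name and the claim IDs are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Hypothetical append-only, hash-chained log of agent actions (not a SAS Viya API)."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same content always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, agent_id: str, action: str, inputs: dict, rationale: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action": action,
            "inputs": dict(inputs),  # copy so later changes to the caller's dict are not logged
            "rationale": rationale,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        # Each hash covers the previous entry's hash, chaining the entries together.
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain of hashes is broken."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("claims-agent-01", "approve_claim", {"claim_id": "C-1001", "amount": 1200}, "within policy limits")
log.record("claims-agent-01", "escalate_claim", {"claim_id": "C-1002", "amount": 98000}, "above autonomy threshold")
print(log.verify())  # True
log.entries[0]["inputs"]["amount"] = 12  # simulate after-the-fact tampering
print(log.verify())  # False
```

Because each entry's hash covers the previous entry's hash, an after-the-fact edit to any recorded decision is detectable, which is the property auditors and regulators care about when they ask why an agent acted as it did.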

Emerging Frontiers: GenAI, Agentic AI, Quantum … and Digital Twins

As executives grapple with today's AI challenges, the next wave is already emerging. Generative AI may have taken the world by storm in the last two years (GenAI use jumped ahead of traditional AI in many firms), but the frontier is expanding: agentic AI, quantum AI, and digital twins are entering the conversation. Each holds promise – and its own trust dilemmas.

Agentic AI refers to AI agents that can make autonomous decisions. It’s the logical evolution beyond chatbots – think AI systems that proactively monitor, decide, and act (like an AI that adjusts an insurance portfolio autonomously in real time).

This could revolutionize operations, but most organizations aren’t ready. Why? The same trust foundations we discussed. Agentic AI is currently “held back by weak data infrastructure, poor governance and a lack of AI skills,” as the study notes. In fact, many leaders foresee agentic AI progress stalling without optimized cloud environments and strong governance frameworks in place.

Moreover, agentic AI requires organizational process redesign: you can't just plug in an AI agent and let it loose without reengineering workflows and controls. Early adopters report new hurdles in scaling these agents, from API integration and multi-agent orchestration to hybrid cloud data management. The trust imperative here is clear: if you're going to let AI drive, you’d better have guardrails on the road. That means clear rules on when an AI agent can act autonomously vs. when human sign-off is needed (a “human-in-the-loop” model), and continuous monitoring so you can pull the plug if things go awry.
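A human-in-the-loop rule can be as simple as an explicit routing function. The Python sketch below is a hypothetical illustration, not a vendor feature: the thresholds (90% confidence, 10,000 in claim value), the rule that adverse decisions such as claim denials always go to a person, and the `agent_enabled` switch are all assumptions, chosen to mirror the claims scenario in the opening vignette.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_REVIEW = "human_review"
    BLOCKED = "blocked"


@dataclass(frozen=True)
class AutonomyPolicy:
    # Hypothetical thresholds; real values would come from risk appetite and regulation.
    min_confidence: float = 0.90
    max_autonomous_amount: float = 10_000.0
    agent_enabled: bool = True  # the "pull the plug" switch for monitoring teams


def route_action(policy: AutonomyPolicy, confidence: float, amount: float, adverse: bool) -> Route:
    """Decide whether an agent may act alone or must wait for human sign-off."""
    if not policy.agent_enabled:
        return Route.BLOCKED
    # Adverse outcomes (e.g. denying a claim) always get a human in the loop.
    if adverse:
        return Route.HUMAN_REVIEW
    # Low confidence or high value also requires a human decision.
    if confidence < policy.min_confidence or amount > policy.max_autonomous_amount:
        return Route.HUMAN_REVIEW
    return Route.AUTONOMOUS


policy = AutonomyPolicy()
print(route_action(policy, confidence=0.97, amount=1_200, adverse=False))  # Route.AUTONOMOUS
print(route_action(policy, confidence=0.97, amount=1_200, adverse=True))   # Route.HUMAN_REVIEW
print(route_action(AutonomyPolicy(agent_enabled=False), 0.99, 50, False))  # Route.BLOCKED
```

Keeping the policy in one small, versioned object makes the autonomy boundary something risk and compliance teams can review and sign off on, rather than a behavior buried inside prompts or model weights.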

“We love the creative thinking of [large language models]… but it's terrible at math, it’s terrible at quantitative analysis. The trick is to build agentic workflows that offload the tasks to quantitative systems that are already trusted… If you do this, you can increase the trust of that experience because the answers are correct, and you’re now increasing the access to data and systems through this natural‑language experience.” Bryan Harris, CTO, SAS

Then there's Quantum AI, which is still largely experimental – yet confidence in it is surging faster than the tech itself. About one-third of global execs say they’re already familiar with the concept of quantum AI, and 26% report complete trust in it, even though real-world applications are scarce today. It’s the new shiny thing: the idea that quantum computing could supercharge AI to solve intractable problems (from cryptography to climate modeling) has business leaders excited. However, this optimism can be dangerous if it outpaces reality.

Quantum AI will demand even greater diligence in validation. Quantum algorithms are less interpretable, and the risks (and rewards) will be massive. The smart play for now: explore quantum's potential (some industries, like finance and logistics, are already piloting quantum-inspired models), but don’t trust what you don’t yet fully understand. In other words, keep the enthusiasm in check with experimentation and education, so when quantum AI does mature, your team isn't blindly trusting a “black box on steroids.”

And let’s not forget digital twins – virtual replicas of physical systems – which are increasingly paired with AI to simulate scenarios (from smart cities to supply chains to individualized insurance policies). Digital twins offer a playground for AI to test decisions in a low-risk virtual environment. For insurers, imagine a “digital twin” of a customer’s risk profile or an entire portfolio, where AI can foresee the impact of, say, a category 5 hurricane before it happens in the real world. These simulations can dramatically improve resiliency and foresight. But, as with any model, a twin is only as trustworthy as the data and assumptions behind it.

Ensuring your digital twins are fed with accurate, unbiased data and regularly validated is key – essentially applying the same governance you would to live AI systems. The emerging trend is to integrate such simulations with agentic AI: SAS, for instance, has begun offering industry-specific models and digital twin simulations in areas like manufacturing and banking to test AI-driven decisions under various scenarios. This can boost trust – when you can show your AI’s decisions work in a simulated mirror of reality, it builds confidence. Still, transparency is crucial. Stakeholders will want to know why the twin predicts what it does.

“The important thing about agentic AI is that it reasons and comes back on itself; it takes feedback from humans and experiences. As we get more mature, we’re trying to use these agentic AIs to make more efficient processes and string very complex processes along a series of agents. Today we might have a single agent, but in the future we see these agents as co‑workers with us – and with each other – doing tasks that were largely manual. We have to know what’s happening at every stage and have visibility and transparency into those processes to be able to trust the AI.” Kathy Lange – Research Director for AI & Automation, IDC

Across these frontiers, one theme persists: trust can't be an afterthought. Whether it’s a customer service GenAI today or a fleet of autonomous agents and quantum optimizers tomorrow, the winners will be those who infuse trust and governance into every layer of innovation. The future promises even more powerful AI, which could either be a boon or a ticking time bomb, depending on how we handle the trust imperative now.

What Leaders Must Do Next

The mandate for senior data and AI leaders is clear: bridge the trust gap now or risk playing catch-up later (perhaps in crisis mode). Here are actionable steps to get started:

- Establish AI Trust Metrics & Accountability: You can’t manage what you don’t measure. Define metrics like an “AI Trust Index” for your organization – e.g., percentage of AI decisions that are explainable, compliance with ethical guidelines, and user trust scores. Regularly audit and report on these. Make AI governance a board-level concern, not just an IT box to check.

- Invest in Data Foundations: Treat data as a strategic asset. Break down silos, modernize your cloud and data pipelines, and ensure data is high-quality, diverse, and bias-checked. Nearly half of firms cite non-centralized data as a barrier; fix it. Strong data foundations are the bedrock of both AI performance and trust.

- Embed Governance and Explainability by Design: Don’t bolt on governance at the end – bake it in. Choose AI platforms and tools that offer built-in transparency, audit trails and compliance features. For example, require that for every AI model or agent deployed, there’s an accessible explanation for its outputs (for both experts and non-technical stakeholders). This not only satisfies regulators, it builds confidence among your staff and customers.

- Upskill and Culturally Empower Your Workforce: A culture of “trust but verify” starts with people. Train employees (from data scientists to business users) on how to interpret AI results and when to question them. Encourage a mindset where using AI is encouraged, but blind trust in AI is not. Also, infuse AI ethics into company values – reward teams for raising concerns or spotting biases, not just for hitting performance metrics.

- Align AI Initiatives with Strategic Value: When evaluating AI projects, ask how each will enhance customer experience, resilience, or growth – not just cut costs. The research shows that focusing AI on CX and innovation yields higher ROI than chasing short-term savings. Prioritize use cases where AI can augment human expertise to create new value (e.g., personalized financial advice, proactive risk prevention) and build trust by delivering tangible positive outcomes.
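As an example of the first step, an internal trust index can be a transparent weighted average of the metrics named above. This is a hypothetical Python sketch: the weights, the 1-5 survey scale and the sample figures are assumptions, and it is not the methodology behind SAS's Trustworthy AI Index.

```python
from dataclasses import dataclass


@dataclass
class TrustMetrics:
    explainable_decisions: int    # decisions with an accessible explanation
    total_decisions: int
    guideline_checks_passed: int  # ethical/compliance checks passed
    guideline_checks_total: int
    avg_user_trust: float         # survey score on a 1-5 scale


# Hypothetical weights; each organization would set and publish its own.
WEIGHTS = {"explainability": 0.4, "compliance": 0.4, "user_trust": 0.2}


def trust_index(m: TrustMetrics) -> float:
    """Combine the three example metrics into one 0-100 score."""
    components = {
        "explainability": m.explainable_decisions / m.total_decisions,
        "compliance": m.guideline_checks_passed / m.guideline_checks_total,
        # Rescale the 1-5 survey score to the 0-1 range.
        "user_trust": (m.avg_user_trust - 1) / 4,
    }
    return round(100 * sum(WEIGHTS[k] * v for k, v in components.items()), 1)


q3 = TrustMetrics(explainable_decisions=8_200, total_decisions=10_000,
                  guideline_checks_passed=47, guideline_checks_total=50,
                  avg_user_trust=3.8)
print(trust_index(q3))  # 84.4
```

Keeping the formula simple and visible is deliberate: if the board cannot see how the score is built, the index itself becomes another black box.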

Finally, lead from the front. The trust imperative isn’t about stifling AI's speed; it is about enabling sustainable AI success. Senior leaders like Jordan Reyes must champion both innovation and responsibility in equal measure. As Jonah Berger's research on viral ideas suggests, make this a story worth sharing: companies that master trusted AI will not only avoid the nightmare scenarios, they'll unlock a reputation for reliability that others envy (social currency), they’ll trigger customer loyalty and word-of-mouth (triggers & emotion), and they’ll set the standard that competitors scramble to follow (public & practical value).

“If your brand is about reducing the cost model as much as possible and then delivering terrible experiences, it doesn’t matter – it’s bad for the business. Right now, leaders need to lead, and not with this idea of fear, but with the empowerment of people. I’m having conversations with my kids because I want a world where they can use their talents and what they’re learning. I don’t want a world where it’s you against the bad economy and AI.” Bryan Harris, CTO, SAS

The clock is ticking towards 2030. Trust, once lost, is hard to regain – in the market, in your data, and in AI itself. The good news is that the playbook is emerging now, backed by data and hard-won lessons. Build the guardrails. Empower your people. Demand transparency. Treat trust as the North Star of your AI strategy. Do this, and you won’t just sleep better at night – you'll position your organization to thrive in the coming AI-powered decade.

What do you think? Is your organization doing enough to turn AI trust into a competitive edge – or are there gaps to close now?

Sources:

Study: Trust in GenAI surges globally despite gaps in AI safeguards | SAS

SAS AI Expert Panel - The Trust Dilemma: Data & AI in 2025

Data and AI Impact Report: The Trust Imperative

FAQs | Frequently Asked Questions

What does “trusted” or “trustworthy” AI mean at SAS, and why does it matter?

At SAS, Trustworthy AI means systems built with human-centric principles—transparency, robustness, accountability, fairness, privacy, and security—throughout the AI lifecycle. It matters because only AI that stakeholders trust can scale across business functions. Without that trust, adoption stalls, regulatory risk increases, and brand credibility suffers.

How do organizations build trust in their AI systems (using SAS best practices)?

- Governance by design: Embed oversight structures early—e.g. SAS’s QUAD model (Oversight, Operations, Compliance, Culture) ensures ethical alignment across teams.

- Data integrity and ethics: Use high-quality, representative data, maintain lineage and apply bias checks.

- Cross-functional stewardship: Involve legal, risk, IT, and domain experts in model development to align on ethics, compliance and business context.

- Continuous monitoring & explainability: Apply performance checks, drift detection and generate explanations for decisions. SAS Viya’s agentic AI capabilities include built-in transparency and logging.
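Drift detection, mentioned in the last bullet, is often implemented with the Population Stability Index (PSI), which compares the distribution of a feature or model score at training time with its distribution in production. The Python sketch below is a generic, dependency-free illustration and makes no claim about how SAS Viya computes drift; the 10 equal-width bins and the small floor for empty bins are assumptions.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    # PSI = sum over bins of (actual share - expected share) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(1000)]  # scores seen at training time
shifted = [v + 3 for v in baseline]        # production scores that have drifted upward
print(psi(baseline, baseline))  # 0.0
print(psi(baseline, shifted))
```

A widely used rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as worth investigating and above 0.25 as significant drift, though each organization should calibrate its own alert levels.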

What is agentic AI in the SAS context, and how is it different from generative AI?

- Agentic AI (with SAS Viya) comprises autonomous agents that make decisions, plan multistep actions, and execute with oversight. SAS emphasizes a balance between autonomy and human control.

- Generative AI focuses on producing content—text, images, code—based on learned patterns. It is reactive, whereas agentic AI is proactive and decision-driven.

- SAS agentic AI includes embedded governance and explainability (not bolted-on) so that agents can be trusted in critical enterprise workflows.

How can leaders ensure agentic AI is used responsibly?

- Define clear autonomy thresholds: decide what tasks agents can perform independently vs. those requiring human review.

- Build feedback loops and explainability tools: agents should log decisions, flag anomalies, and be auditable.

- Validate results via domain experts, especially in regulated sectors (insurance, healthcare).

- Use SAS Intelligent Decisioning to combine deterministic rules and LLM reasoning under controlled guardrails.

Why is data governance critical in an AI strategy?

AI’s decisions are only as good as the data that feeds them, which is why SAS states that trustworthy AI depends on strong data foundations: lineage, quality, and ethical use. Poor governance leads to biased, inconsistent or non-compliant models, breaking trust rather than building it.